What's this about ?

Game theory is where the decisions we make are not just about winning or losing but can shape the very fabric of our lives. Let's discuss the most famous problem in game theory, a puzzle that transcends from global conflicts like the cold war to the dynamics of everyday choices. Figuring out the best strategy can be the key difference between life and death, war and peace.

The Birth of The Prisoner's Dilemma - The Cold War

September 3, 1949

Imagine a world on the brink of a nuclear era, where each air sample carries whispers of international tension - radioactivity. On that fateful day, an American weather monitoring plane soared over Japan, unwittingly collecting more than atmospheric data—it gathered the echoes of a brewing conflict.

In those air samples lay the ominous traces of radioactive material, sparking a frantic investigation by the US Navy. Cerium-141 and Yttrium-91, with their fleeting half-lives, hinted at a recent nuclear explosion. The unsettling truth? The Soviet Union might have mastered the art of creating a nuclear bomb.

The implications were staggering. The Manhattan Project's military supremacy was slipping through American fingers, and a sense of urgency gripped the nation. The problem at hand was not just about geopolitics; it was a puzzle threatening the very fabric of global security.

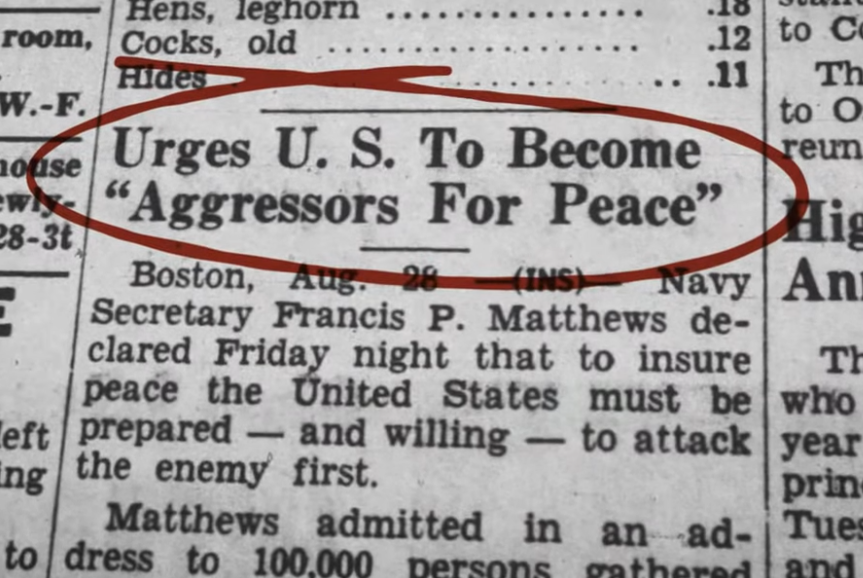

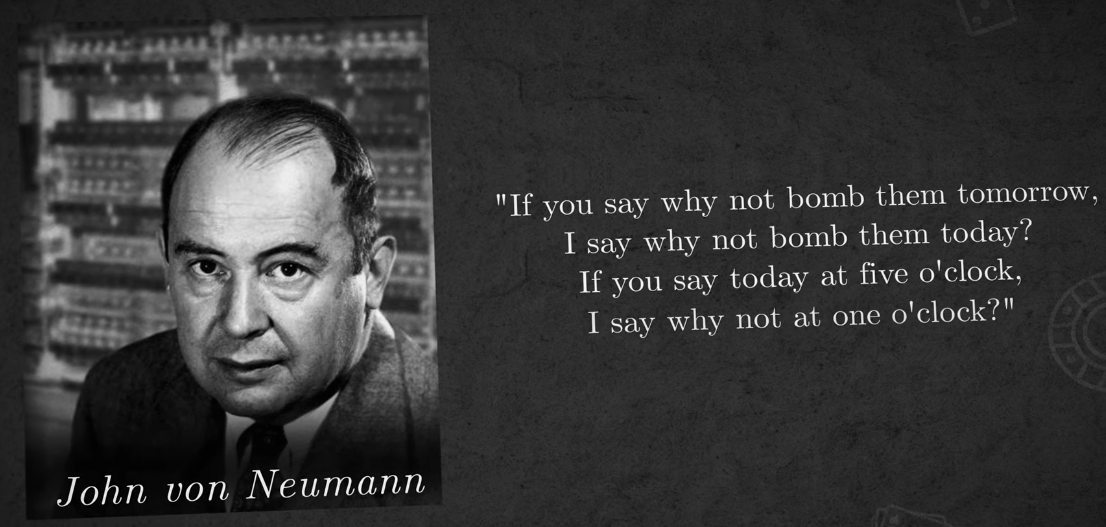

Navy Secretary Matthews, contemplating an unprovoked nuclear strike, declared the need to be aggressors for peace. Amidst the ominous shadows of potential war, a visionary named John von Neuman, founder of game theory, posed a profound question.

This dilemma birthed a quest for solutions, leading the RAND Corporation, a US-based think tank, to delve into the realm of game theory in 1950. Little did they know that within the corridors of mathematical abstraction, a game was being forged—one that would mirror the unfolding drama of the US-Soviet conflict. Unbeknownst to its creators, this game would go on to be recognized as the infamous prisoner's dilemma.

Let's Play a game

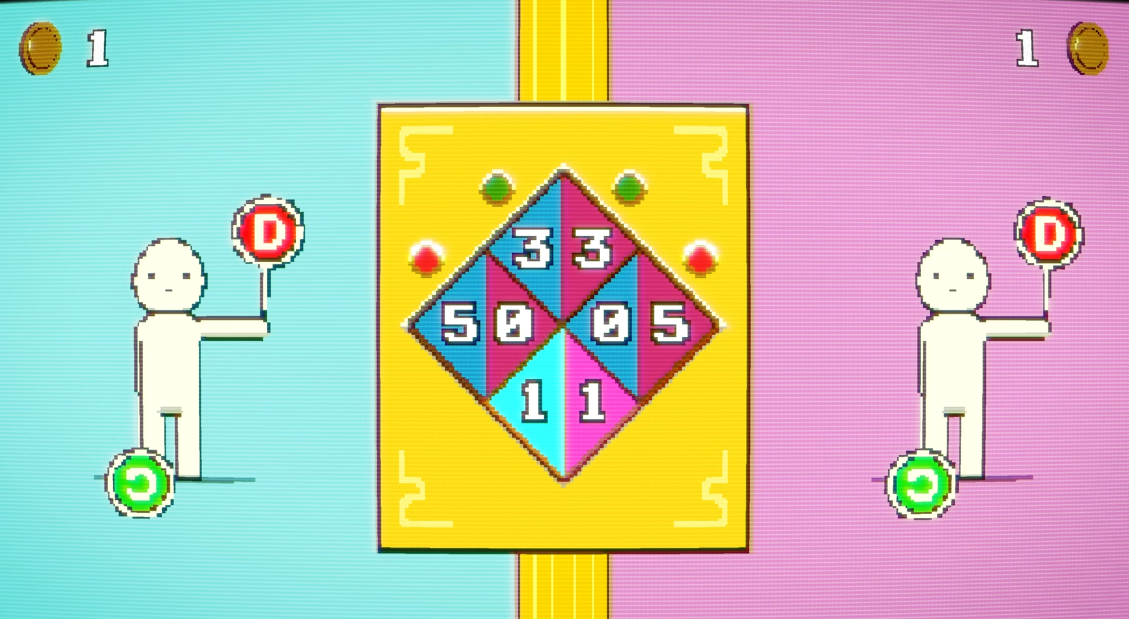

A banker with a chest full of gold coins invites you and another player to play against each other. You each get two choices. You can cooperate or you can defect.

If you both cooperate, you each get three coins.

If one of you cooperates, but the other defects, then the one who defected gets five coins and the other gets nothing.

If you both defect, then you each get a coin.

The goal of the game is simple: to get as many coins as you can.

So what would you do?

Suppose your opponent cooperates, then you could also cooperate and get three coins or you could defect and get five coins instead. So you are better off defecting.

But what if your opponent defects ? Well, you could cooperate and get no coins or you could defect and at least get one coin.

So no matter what your opponent does, your best option is always to defect. Now, if your opponent is also rational, they will reach the same conclusion and therefore also defect. As a result, when you both act rationally, you both end up in the suboptimal situation getting one coin each when you could have gotten three instead.

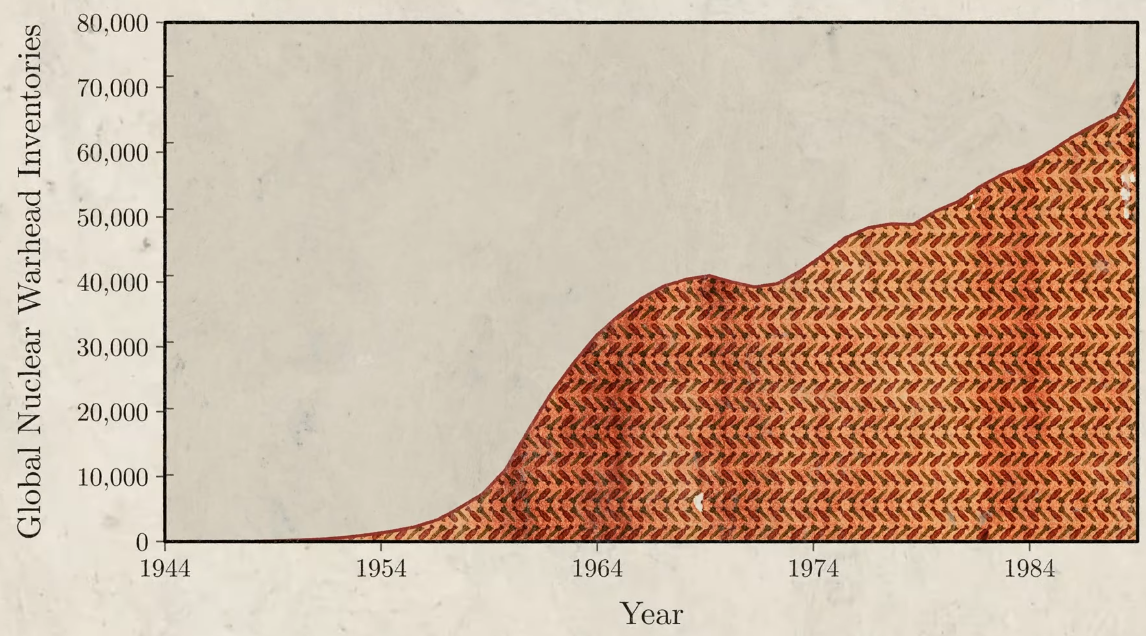

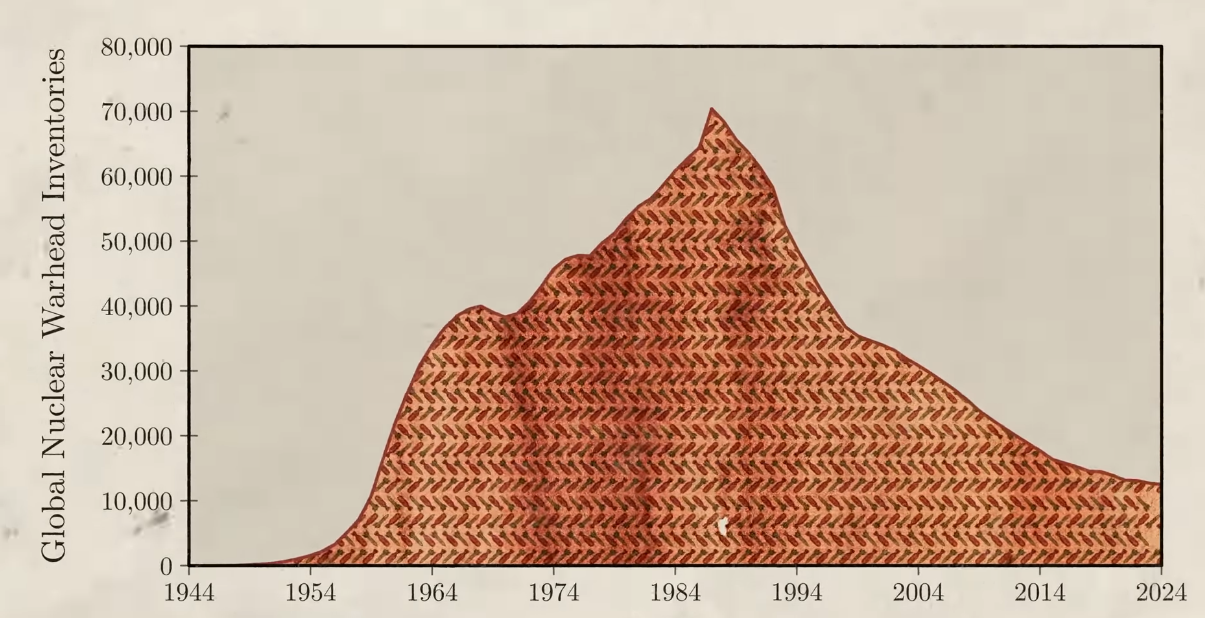

In the case of the US and Soviet Union, this led both countries to develop huge nuclear arsenals of tens of thousands of nuclear weapons each, more than enough to destroy each other many times over. But since both countries had nukes, neither could use them. And both countries spent around $10 trillion (adjusted for today's inflation rate) developing these weapons.

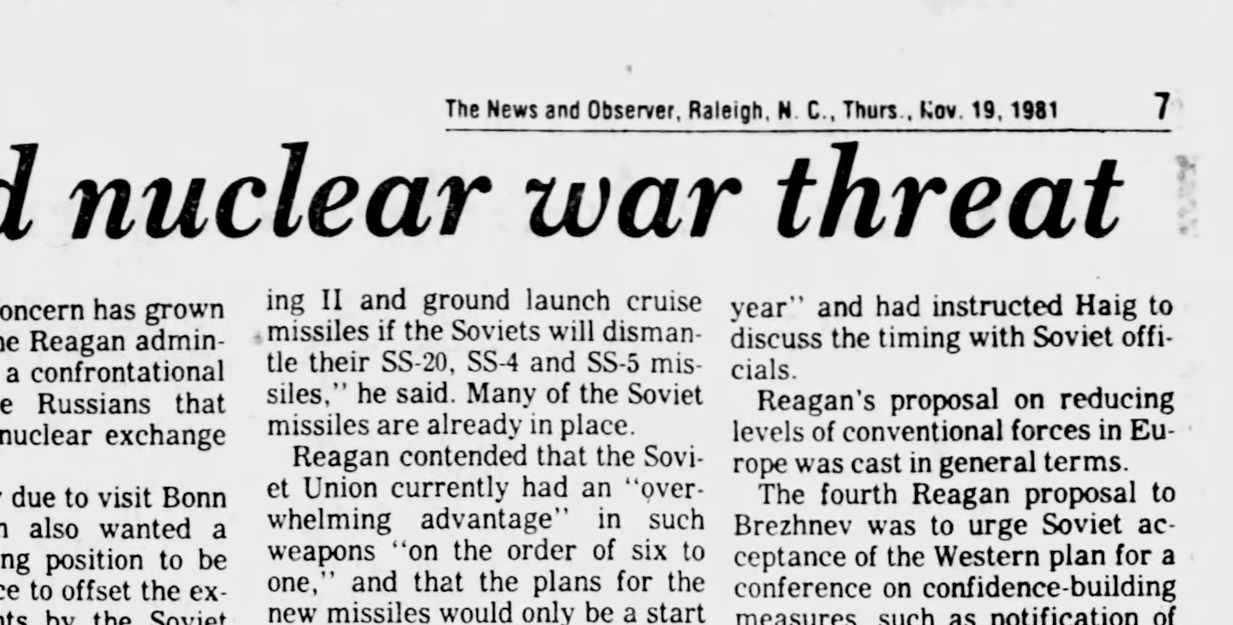

Both sides would've been better off if they had cooperated and agreed not to develop this technology further. But since they both acted in their own best interest, they ended up in a situation where everyone was worse off. This eventually led to a nuclear war threat.

The prisoner's dilemma is one of the most famous games in game theory. Thousands and thousands of papers have been published on versions of this game. In part, that's because it pops up everywhere.

Here is one such paper on this theory published as an article on nature.com which discusses the mathematics behind this dilemma in depth.

A visit to African Woodlands

Impalas living in between African woodlands and Savannahs are prone to catching ticks, which can lead to infectious diseases, paralysis, even death. So it's important for impalas to remove ticks and they do this by grooming, but they can't reach all the spots on their bodies and therefore they need another impala to groom them. Now, grooming someone else comes at a cost. It costs saliva, electrolytes, time and attention, all vital resources under the hot African sun where a predator could strike at any moment. So for the other impala, it would be best not to pay this cost, but then again, it too will need help grooming.

So all impalas face a choice: should they groom each other or not? In other words, should they cooperate or defect? Well, if they only interact once, then the rational solution is always to defect. That other impala is never gonna help you, so why bother? But the thing about a lot of problems is that they're not a single prisoner's dilemma. Impalas see each other day after day and the same situation keeps happening over and over again. So that changes the problem because instead of playing the prisoner's dilemma just once, you're now playing it many, many times.

If I defect now, then my opponent will know that I'd defected and they can use that against me in the future. So what is the best strategy in this repeated game ?

Strategies

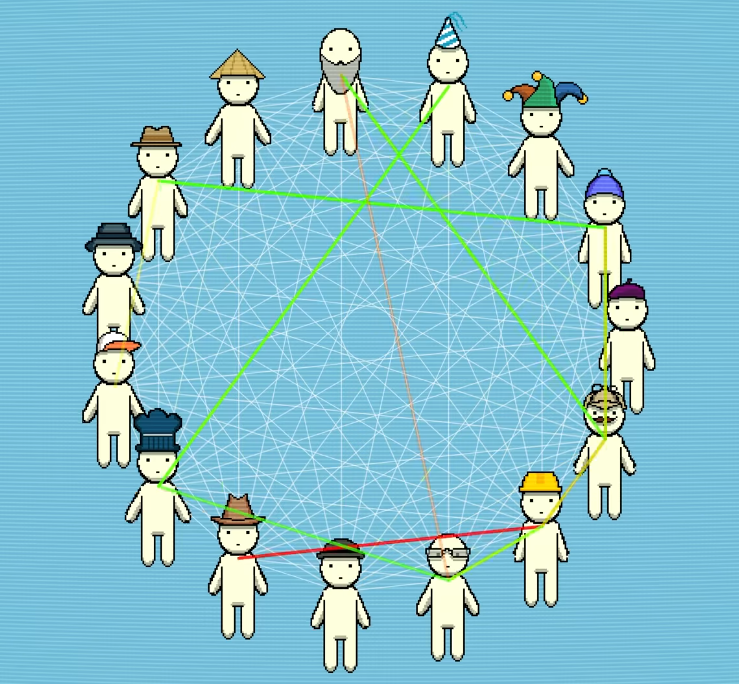

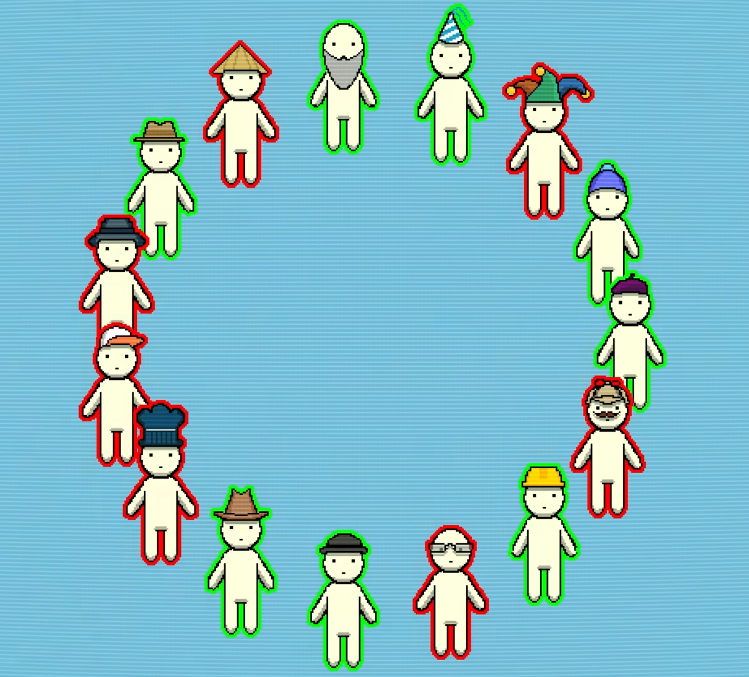

Robert Axelrod, a political scientist wanted to find out. So in 1980 he decided to hold a computer tournament. He invited some of the world's leading game theorists for many different subjects to submit computer programs that would play each other. - Axelrod called these programs strategies.

So what is the best strategy in this repeated game? That is what Each strategy would face off against every other strategy and against a copy of itself and each matchup would go for 200 rounds.

Now, Axelrod used points instead of coins, but the payoffs were the same. The goal of the tournament was to win as many points as possible and in the end, the whole tournament was repeated five times over to ensure the success was robust and not just a fluke.

Axelrod gave an example of a simple strategy. It would start each game by cooperating and only defect after its opponent had defected twice in a row.

In total Axelrod received 14 strategies and he added a 15th called random, which just randomly cooperates or defects 50% of the time.

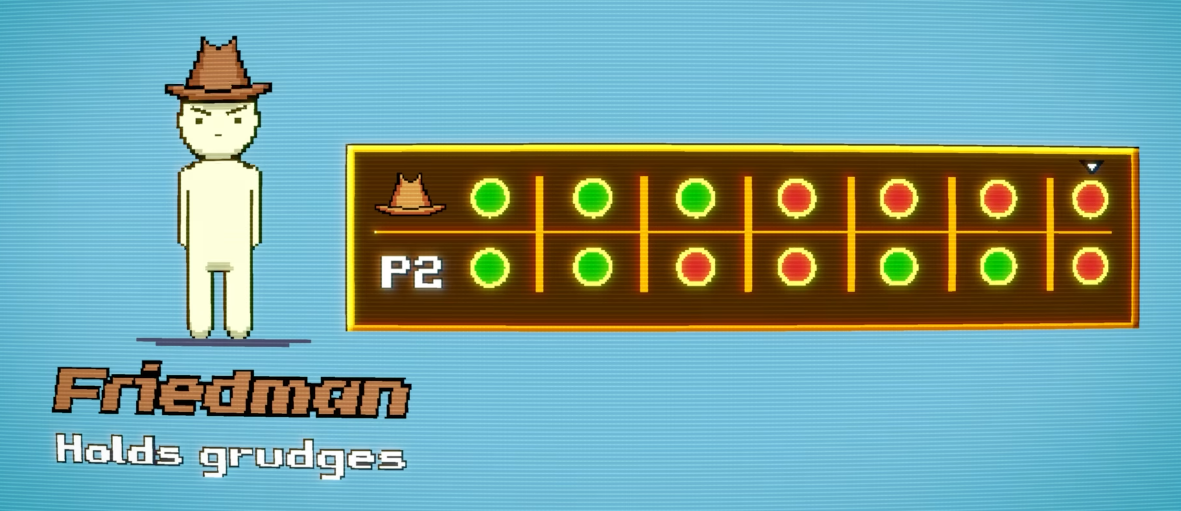

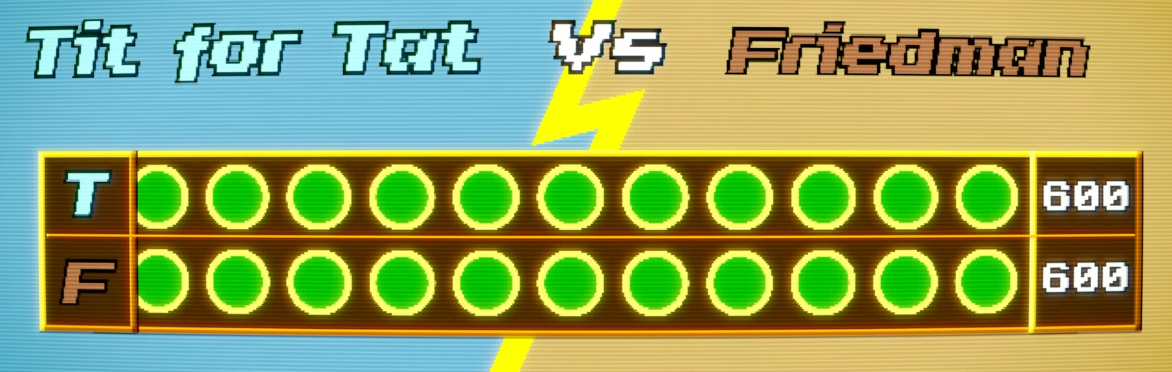

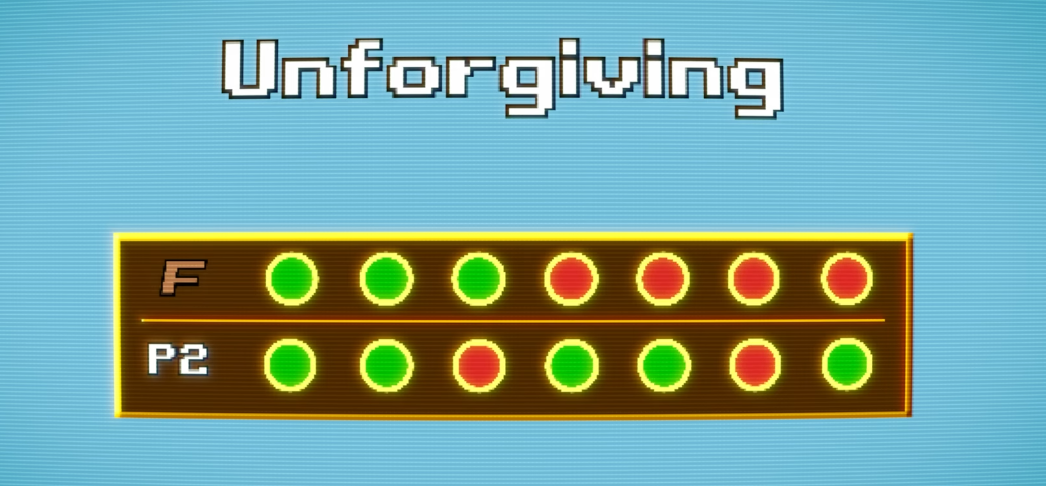

All strategies were loaded onto a single computer where they faced off against each other. One of the strategies was called Friedman. It starts off by cooperating, but if its opponent defects just once, it will keep defecting for the remainder of the game.

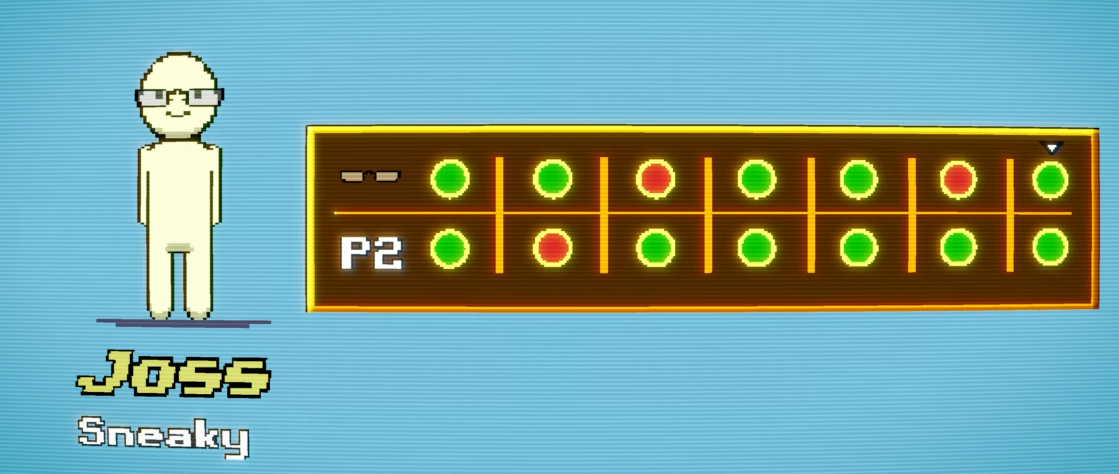

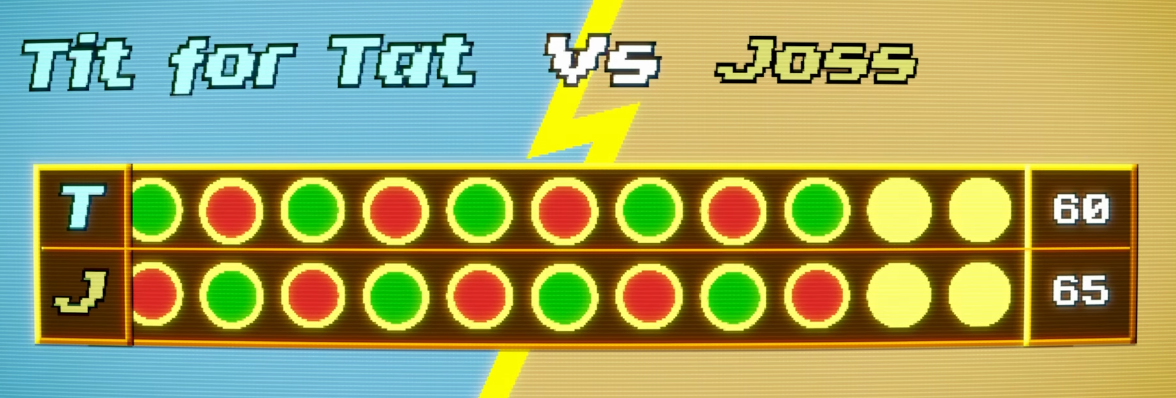

Another strategy was called Joss. It also starts by cooperating, but then it just copies what the other player did on the last move. Then around 10% of the time, Joss gets sneaky and defects.

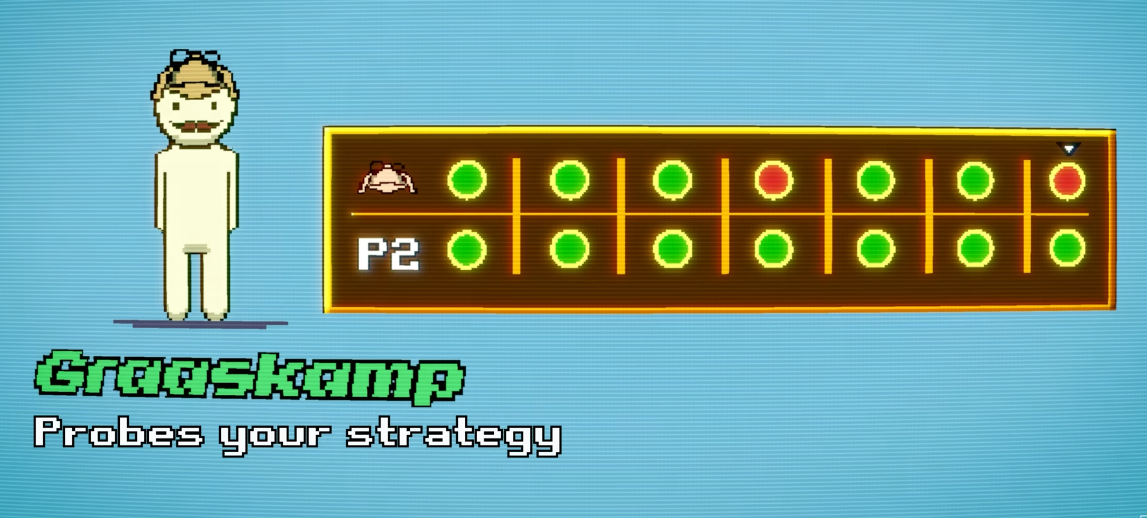

There was also a rather elaborate strategy called Graaskamp. This strategy works the same as Joss, but instead of defecting probabilistically, Graaskamp defects in the 50th round to try and probe the strategy of its opponent and see if it can take advantage of any weaknesses.

The most elaborate strategy was Name Withheld with 77 lines of code.

The winner

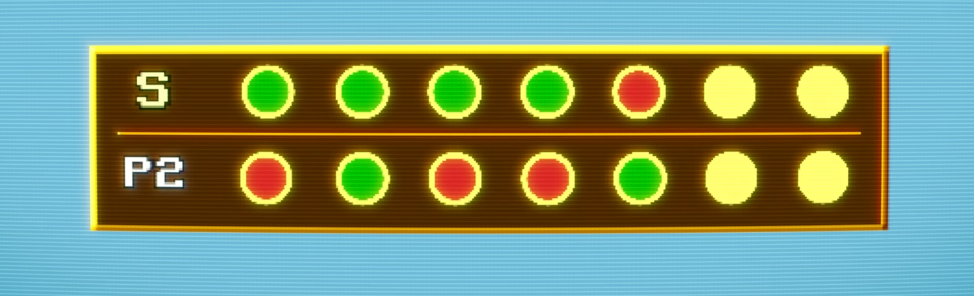

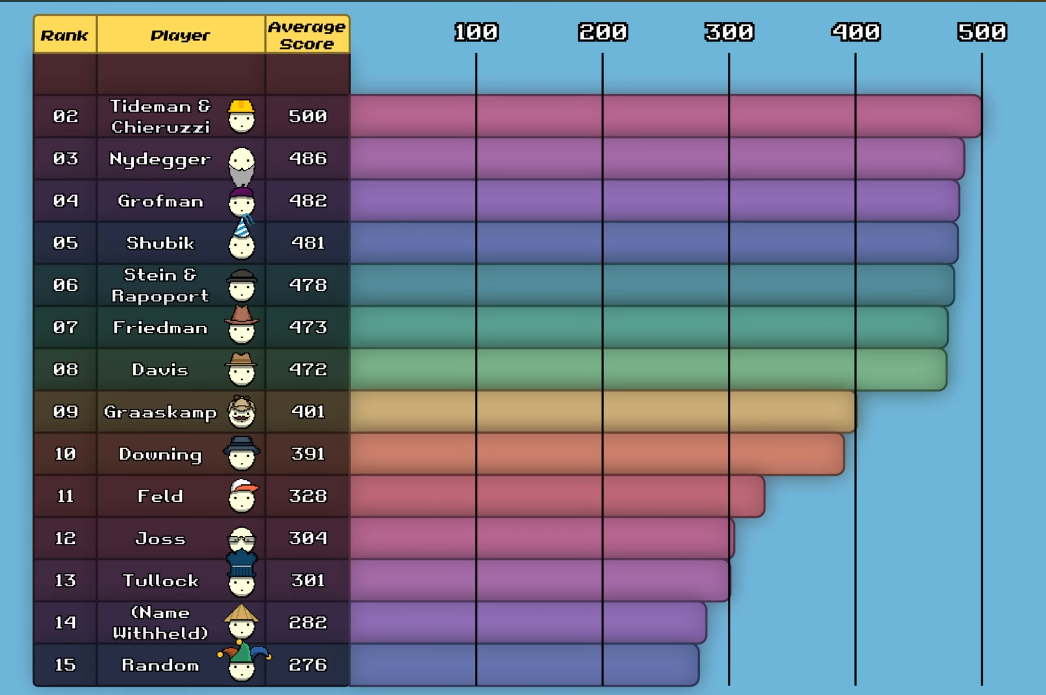

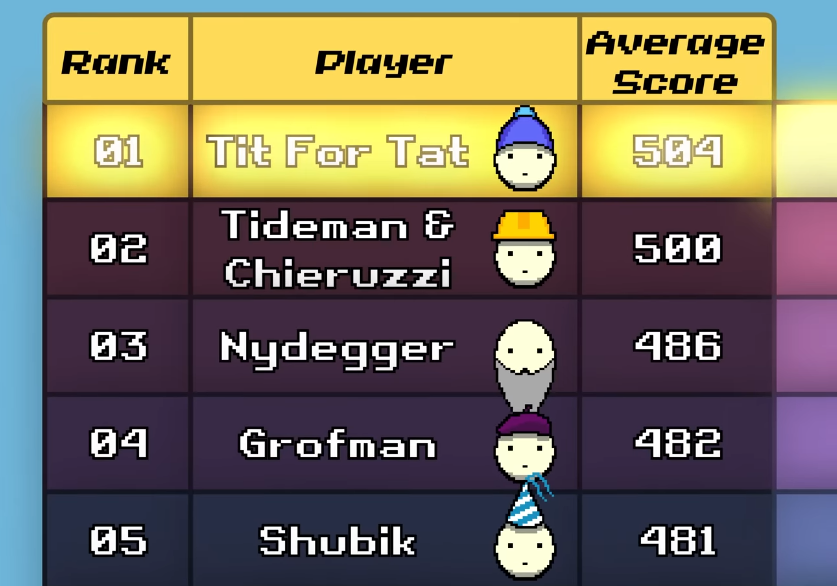

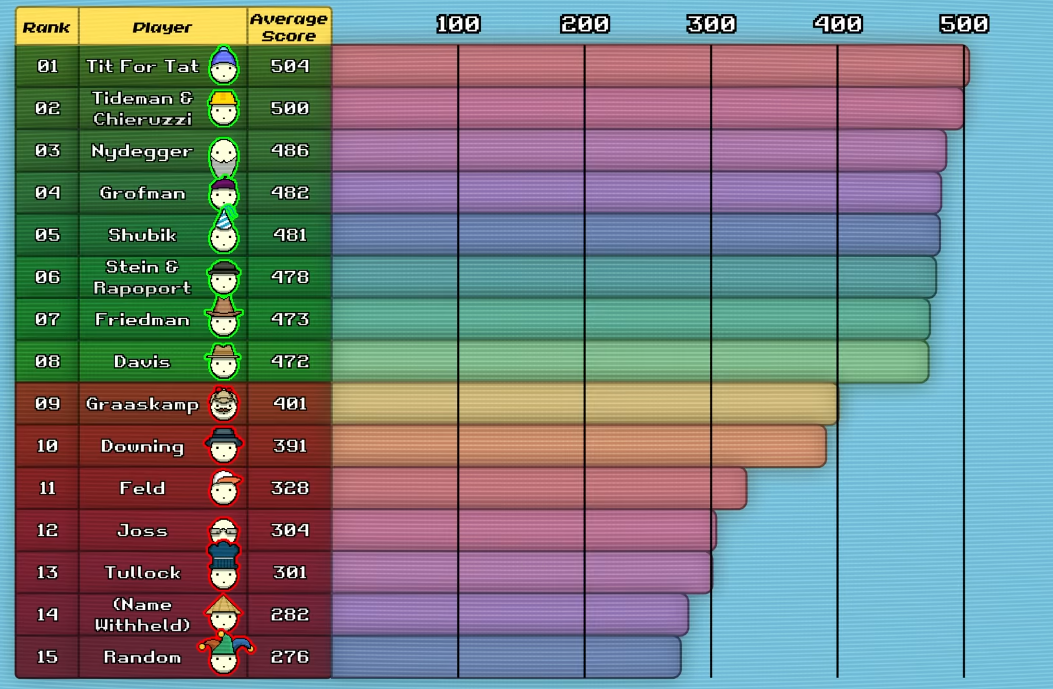

After all the games were played, the results were tallied up and the leaderboard established. The crazy thing was that the simplest program ended up winning, a program that came to be called Tit for Tat.

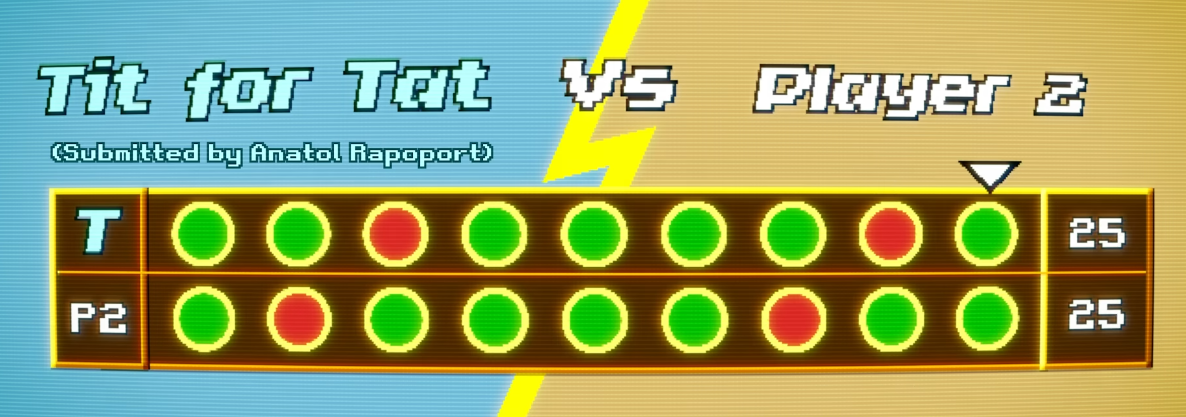

Tit for Tat starts by cooperating and then it copies exactly what its opponent did in the last move. So it would follow cooperation with cooperation and defection with defection, but only once if it's opponent goes back to cooperating. So does Tit for Tat.

When Tit for Tat played against Friedman, both started by cooperating and they kept cooperating both ending up with perfect scores for complete cooperation.

When Tit for Tat played against Joss, they too started by cooperating but then on the sixth move, Joss defected. This sparked a series of back and forth defections, a sort of echo effect.

So now we've got this alternating thing which will remind you of some of the politics of the world today where we have to do something to you because of what you did to us. And then when this weird program throws in a second unprovoked defection, now it's really bad because now both programs are gonna defect on each other for the rest of the game. And that's also like some of the things that we're seeing in politics today and in international relations.

As a result of these mutual retaliations, both Tit for Tat and Joss did poorly. But because Tit for Tat managed to cooperate with enough other strategies, it still won the tournament.

We imagined initially it'd be sort of like computer chess where you need a pretty complicated program to play a sophisticated game. But in fact it was not like that at all. It was the simplest strategy that did the best.

The Correlation

Axelrod found that all the best performing strategies, including Tit for Tat, shared four qualities.

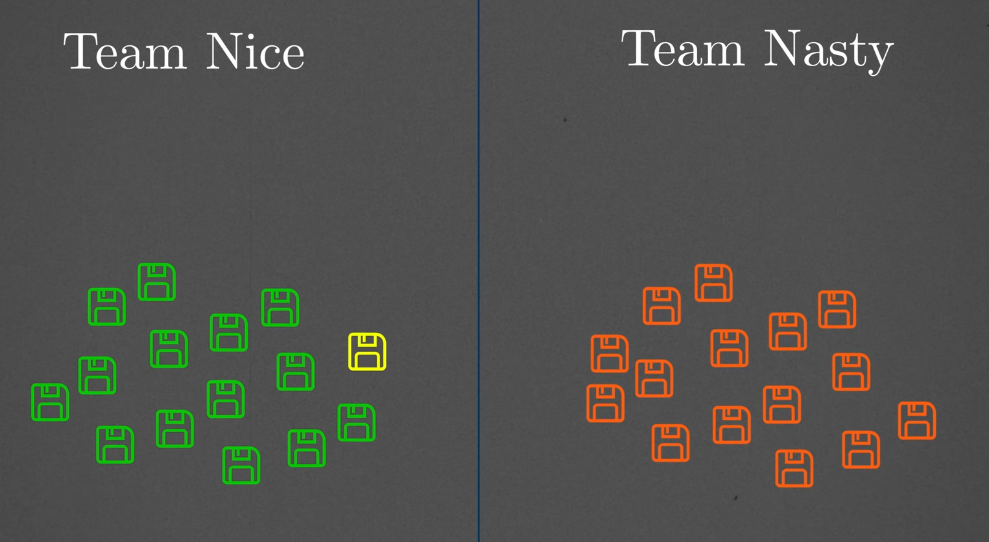

Nice

First, they were all nice, which just means they are not the first to defect. So Tit for Tat is a nice strategy, it can defect but only in retaliation.

The opposite of nice is nasty. That's a strategy that defects first. So Joss is nasty.

Out of the 15 strategies in the tournament, eight were nice and seven nasty.

The top eight strategies were all nice and even the worst performing nice strategy still far outscored the best performing nasty one.

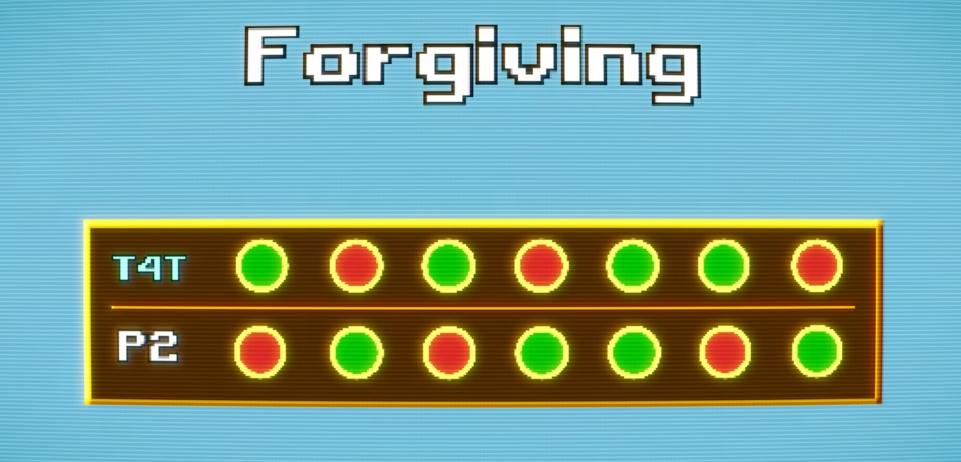

Forgiving

The second important quality was being forgiving. A forgiving strategy is one that can retaliate but it doesn't hold a grudge.

So Tit for Tat is a forgiving strategy. It retaliates when its opponent defects but it doesn't let affections from before the last round influence its current decisions.

Friedman on the other hand, is maximally unforgiving. After the first defection from the opponent would defect for the rest of the game. Okay, that's it - no mercy.

That might feel good to do but it doesn't end up working out well in the long run.

This conclusion that it pays to be nice and forgiving came as a shock to the experts. Many had tried to be tricky and create subtle nasty strategies to beat their opponent and take out an advantage, but they all failed. Instead, in this tournament, nice guys finished first.

Can we do better ?

Tit for Tat is quite forgiving but it's possible to be even more forgiving - the Sample Strategy.

Axelrod's sample strategy only defects after its opponent defected twice in a row. It was Tit for Two Tats.

Now that might sound overly generous, but when Axelrod ran the numbers, he found that if anyone had submitted the Sample strategy, they would've won the tournament.

Round 2

After Axelrod published his analysis of what happened or circulated it among these game theorists, he said - "now that we all know what worked well, let's try again".

He announced a second tournament where everything would be the same except for one change - the number of rounds per game.

The Change

In the first tournament, each game lasted precisely 200 rounds. And that is important because if you know when the last round is, then there's no reason to cooperate in that round. So you're better off defecting.

Of course your opponent should reason the same and so they should also defect in the last round. But if you both anticipate defection in the last round, then there's no reason for you to cooperate in the second to last round or the round before that, or before that and so on all the way to the very first round.

So in Axelrod's tournament, it was a very important thing that the players didn't know exactly how long they were gonna be playing. They knew on average it would be 200 rounds, but there was a random number generator that prevented them from knowing with certainty.

If you're not sure when it ends, then you have to kind of keep cooperating 'cause it might keep going and you need might need them on your side.

For this second tournament, Axelrod received 62 entries and again, added random. The contestants had gotten the results and analysis from the first tournament and could use this information to their advantage.

This created two camps. Some thought that clearly being nice and forgiving were winning qualities. So they submitted nice and forgiving strategies. One even submitted Tit for Two Tats. The second camp anticipated that others would be nice and extra forgiving and therefore they submitted nasty strategies to try to take advantage of those that were extra forgiving.

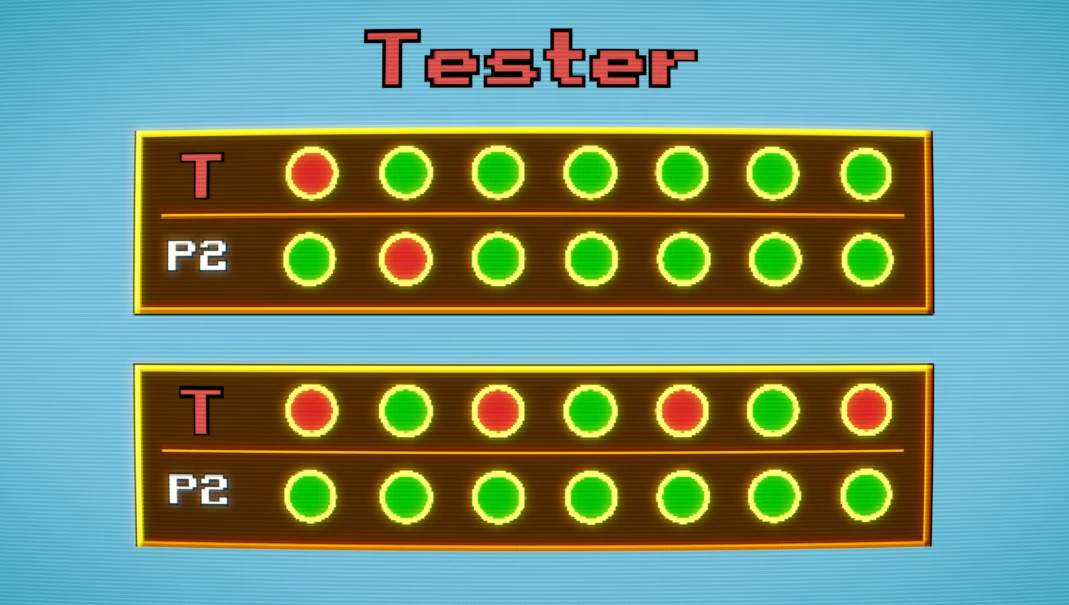

One such strategy was called Tester. It would defect on the first move to see how its opponent reacted. If it retaliated, tester would apologize and play Tit for Tat for the remainder of the game. If it didn't retaliate, tester would defect every other move after that.

But again, being nasty didn't pay. And once again, Tit for Tat was the most effective.

Nice strategies again did much better. In the top 15, only one was not nice.

Similarly, in the bottom 15, only one was not nasty.

The Other Qualities

After the second tournament, Axelrod identified the other qualities that distinguished the better performing strategies. The third is being retaliatory, which means if your opponent defects, strike back immediately, don't be a pushover.

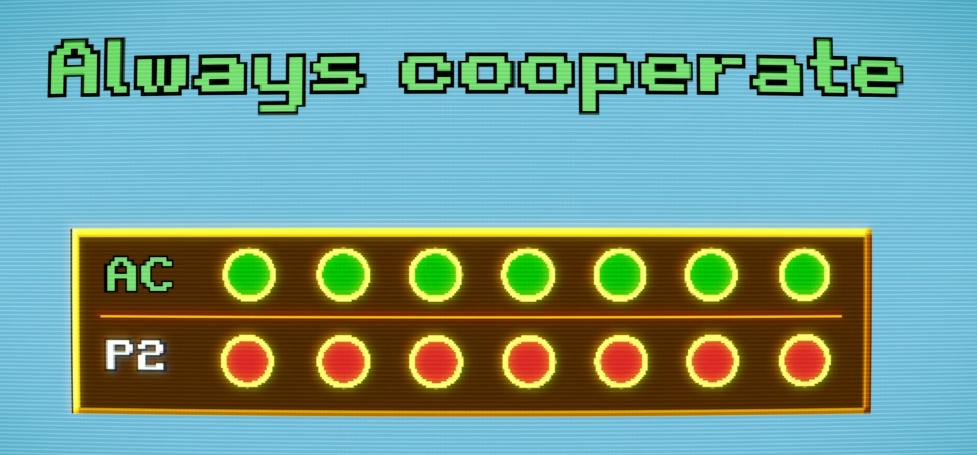

One strategy Always cooperate is a total pushover. And so it's very easy to take advantage of.

Tit for Tat, on the other hand, is very hard to take advantage of.

The last quality that Axelrod identified is being clear.

Programs that were too opaque, that were too similar to a random program, you couldn't figure them out because they were so complicated, it was very hard to establish any pattern of trust with a program like that because you couldn't figure out what it was doing.

The other programs it was playing couldn't figure them out and so they would end up more or less defaulting to thinking every turn is like the last time I'm gonna see you, so I might as well defect.

The takeaways

These four principles being nice, forgiving, provokable and clear is a lot like the morality that has evolved around the world that is often summarized as an eye for an eye.

What's interesting is that while Tit for Two Tats would've won the first tournament, it only came 24th in the second tournament. This highlights an important fact: in the repeated prisoner's dilemma, there is no single best strategy.

The strategy that performs best always depends on the other strategies it's interacting with. For example, if you put Tit for Tat in an environment with only the ultimate bullies of always defect, then Tit for Tat comes in last.

Tit for Tat did well because it did well with really stupid rules that didn't do well with it all themselves that basically it took advantage of people.

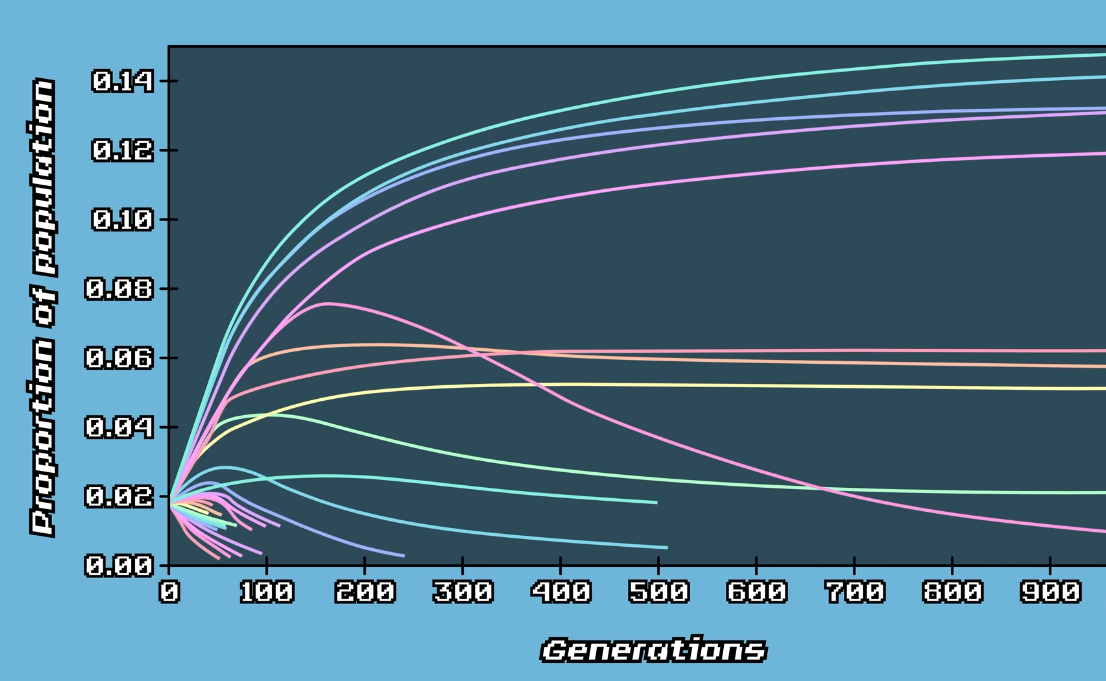

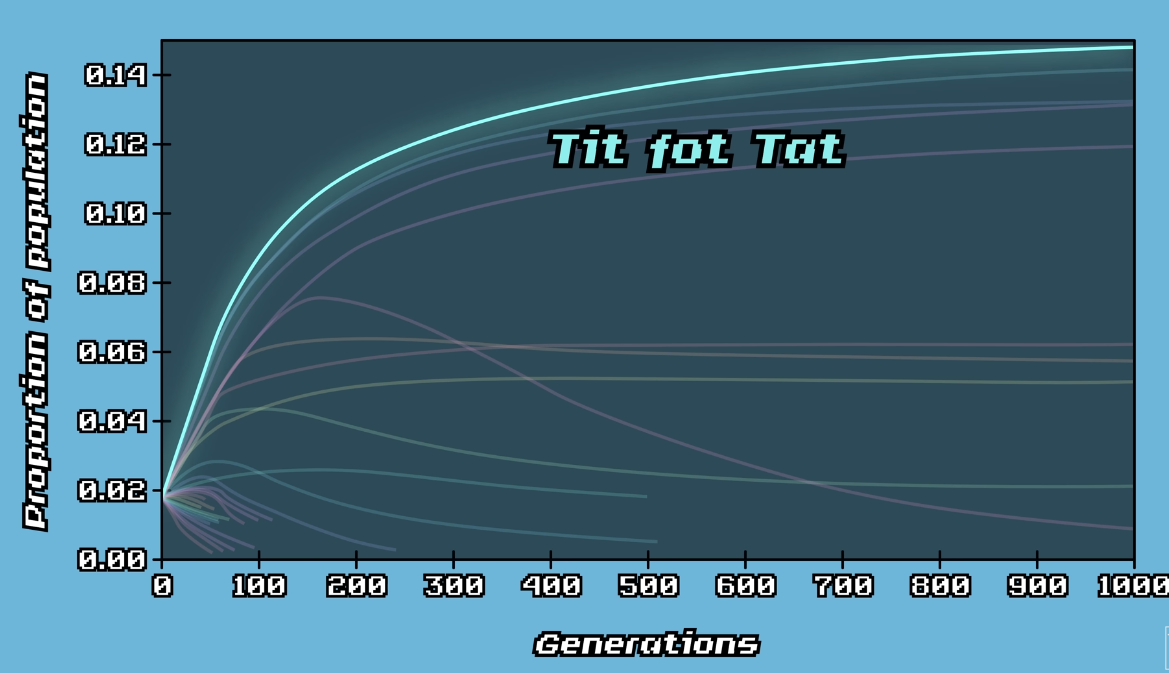

A simulation was run to test the results, where successful strategies in one generation would see their numbers grow and unsuccessful ones would see their numbers drop. In this simulation, the worst performing strategies quickly shrink and go extinct, while the top performing strategies become more common.

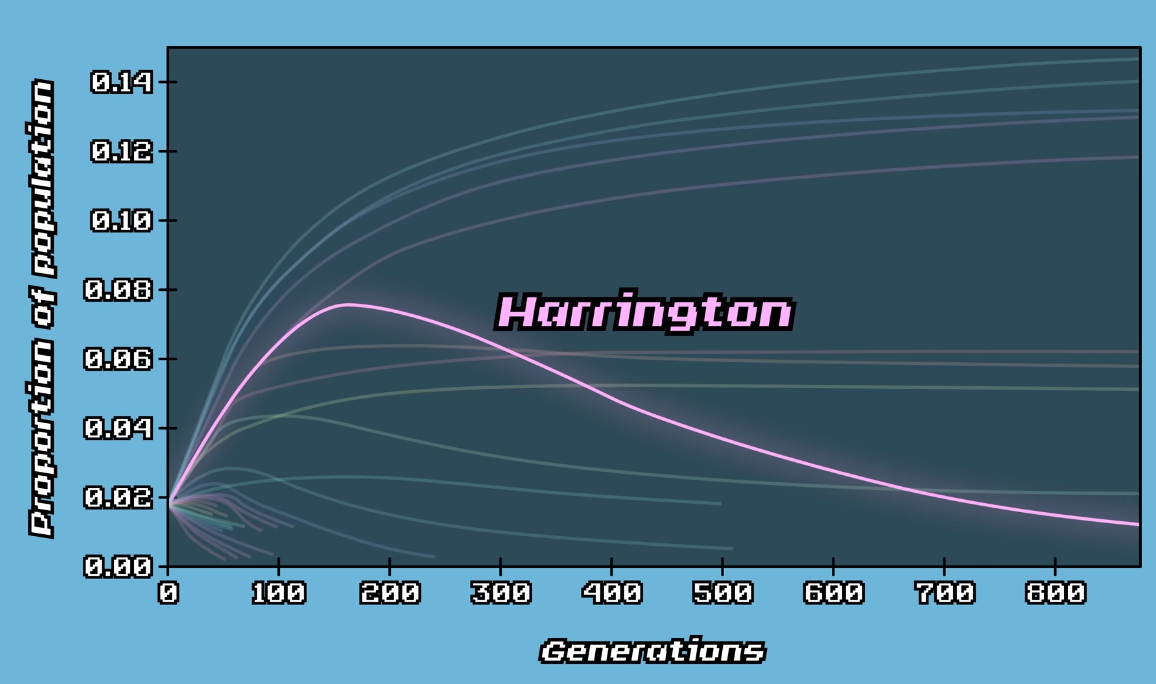

Harrington, the only nasty strategy in the top 15, first grew quickly, but then as the strategies it was preying on went extinct, Harrington's numbers also quickly dropped.

This shows a main benefit of this simulation because it tests how well a strategy does with other successful strategies. After a thousand generations, the proportions are mostly stable and only nice strategies survive.

Tit for Tat comes out on top, representing 14.5% of the total population.

Now this process may sound similar to evolution, but there is a subtle difference, which is that in this case there are no mutations. So it's actually an ecological simulation.

But what if the world you started in was different?

Imagine a world that is a really nasty place to live, more or less populated with players that always defect, except there's a little cluster of tit-for-tat players that live in some kind of nucleus and they get to play with each other a lot because they're geographically sequestered. They will start building up a lot of points, and also because that translates into offspring, they'll start to take over the population.

Axelrod showed that a little island of cooperation can emerge and spread and eventually will take over the world, which is fantastic. How can cooperation emerge in a population of players who are self-interested?

Who are not trying to be good because they're good-hearted. You don't have to be altruistic. You could be looking out for number one for yourself and your own interests. And yet cooperation can still emerge.

Some argue that this could explain how we went from a world full of completely selfish organisms where every organism only cared about themselves to one where cooperation emerged and flourished. From impalas grooming each other to fish cleaning sharks. Many life forms experience conflicts similar to the prisoner's dilemma, but because they don't interact just once, both can be better off by cooperating.

This doesn't require trust or conscious thought either because the strategy could be encoded in DNA, as long as it performs better than the other strategies, it can take over a population.

Axelrod's insights were applied to areas like evolutionary biology and international conflicts, but there was one aspect that his original tournaments didn't cover. What happens if there is a little bit of random error in the game?

Presence of an error

Consider some noise in the system. For example, one player tries to cooperate, but it comes across as a defection. Little errors like this happen in the real world all the time.

Like in 1983, the Soviet satellite-based early warning system detected the launch of an intercontinental ballistic missile from the US but the US hadn't launched anything. The Soviet system had confused sunlight reflecting off high altitude clouds with a ballistic missile. Thankfully, Stanislav Petrov, the Soviet officer on duty, dismissed the alarm. This example shows the potential costs of a signal error and the importance of studying the effects of noise on those strategies.

The word game sounds like it's a children's game or, there's some something, a misnomer maybe in calling it game theory because these are life and death matters obviously. As it came up in the cold war, it could actually be life and death of the whole planet - in the whole we could annihilate human civilization.

These are not games in any kind of trivial sense, it's just the term that is used by mathematicians and theorists.

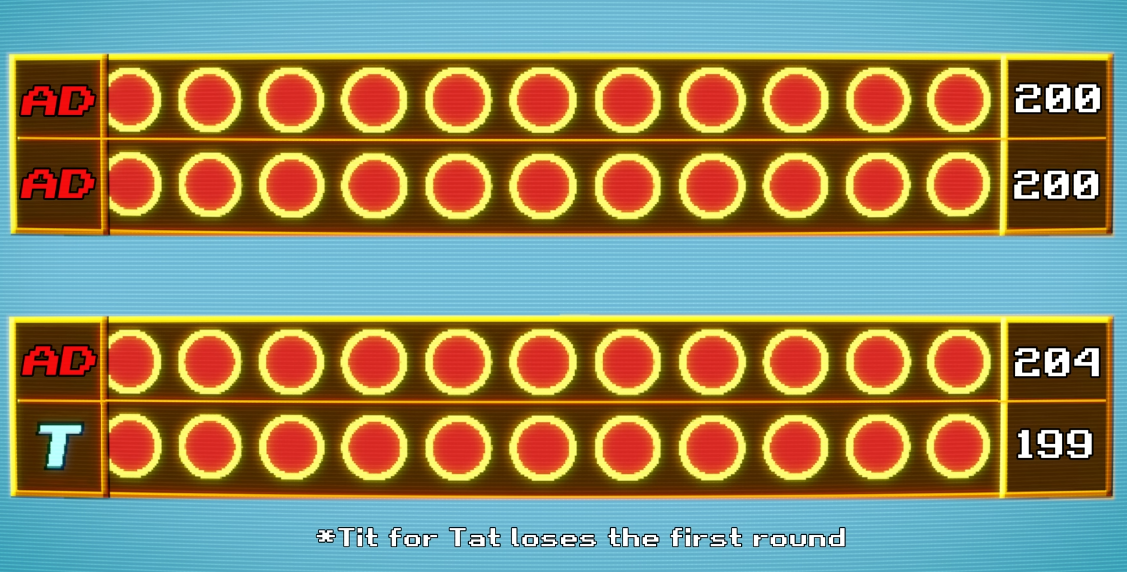

When Tit for Tat plays against itself in a noisy environment, both start off by cooperating, but if a single cooperation is perceived as a defection, then the other Tit for Tat retaliates and it sets off a chain of alternating retaliations. And if another cooperation is perceived as a defection, then the rest of the game is constant mutual defection. Therefore, in the long run, both would only get a third of the points they would get in a perfect environment.

Tit for Tat goes from performing very well to performing poorly.

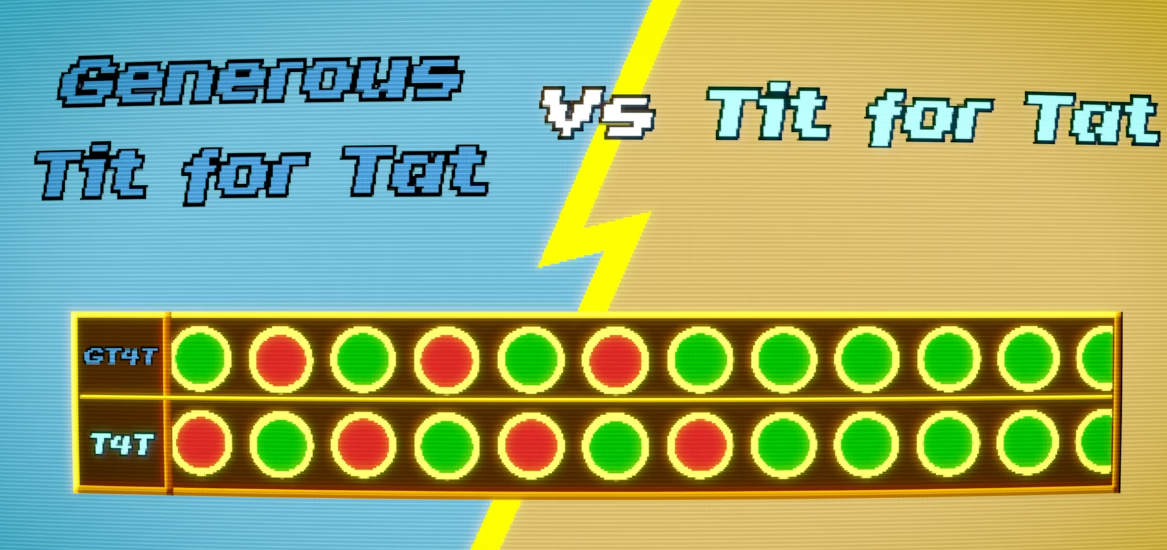

So how do we solve this ? Well, we need a reliable way to break out of these echo effects. And one way to do this is by playing Tit for Tat, but with around 10% more forgiveness. So instead of retaliating after every defection, you only retaliate around nine out of every 10 times.

This helps to break out of those echoes while still being retaliatory enough to not be taken advantage of.

The big Misconception

Tit for Tat does really well, but it could never do better than the player it's playing with.

By design, all the strategies can do is lose or draw with noise. And yet when the results of all interactions are tallied up, they come out ahead of all other strategies. Similarly, always defect can never lose a game. It can only draw or win, but overall, it performs extremely poorly.

This highlights a common misconception because for many people when they think about winning, they think they need to beat the other person. In games like chess or poker, this is true since one person's gain is necessarily another person's loss, so these games are zero sum.

But most of life is not zero sum. To win, you don't need to get your reward from the other player. Instead, you can get it from the banker. Only in real life, the banker is the world. It is literally everything around you. It is just up to us to find those win-win situations, and then work together to unlock those rewards.

Cooperation pays even among rivals. From 1950 to 1986, the US and Soviet Union had trouble cooperating and both kept developing nukes. But then from the late '80s onwards, they started reducing their nuclear stockpiles.

They too had learned how to resolve conflict. Rather than making an agreement to abolish all nuclear arms at once and essentially turning it into a single prisoner's dilemma, they would disarm slowly, a small number of nukes each year and then they'd check each other to see that they had both cooperated and then repeat the year after, and the year after that. All along, checking to ensure mutual cooperation.

In the more than 40 years since Axelrod's tournaments, researchers have continued to study which strategies perform best in a variety of environments. In doing so, they varied everything from payoff structures to strategies to errors and more. Some even allowed the strategies to mutate while Tit for Tat or generous Tit for Tat doesn't always come out on top, Axelrod's main takeaways still hold: be nice, forgiving, but don't be a pushover.

One of the main things that sets life apart from non-living things that life gets to make decisions. We get to make choices. Choices that don't only change our future, but also the future of those we interact with.

In the short term, it is often the environment that shapes the player that determines who does well. But in the long run, it is the players that shape the environment.

So, let's play a game, the game of life, and make your choices wisely because their impact may reach further than you think.

This blog was inspired from Vertasium's video on prisoner's dilemma.